Snapchat’s My AI chatbot offers a ChatGPT-like experience right inside the app, but its unpredictable responses have raised safety concerns.

My AI was first introduced in February 2023 as a Snapchat+ exclusive feature.

A few weeks later, Snapchat made the chatbot available to its global user base, which means hundreds of millions of people now have access to My AI from their chat screen.

But AI chatbots are still new and largely unregulated, and in the race to incorporate AI into its platform, Snapchat may not have considered the safety of its teen user base.

Snapchat says My AI has been programmed to avoid harmful responses, including violent, hateful, sexually explicit, or dangerous information.

But there’s a disclaimer: according to Snapchat, "it may not always be successful."

Snapchat has 750 million monthly active users, of which about 20 percent were in the 13-17 age group in 2022 (via Statista).

This exposes millions of children to potentially dangerous advice, as the Center for Humane Technology demonstrated in an experiment.

## My AI’s Inappropriate Responses

The experiment involved researcher Aza Raskin posing as a 13-year-old girl.

In conversations with My AI, the teen talked about meeting a man 18 years older who wanted to take her on a trip.

In response, My AI encouraged the trip, only telling her to make sure she was "staying safe and being cautious."

When asked about having sex for the first time, the chatbot gave her advice on "setting the mood with candles or music."

In another experiment (via Center for Humane Technology co-founder Tristan Harris), My AI gave a child advice on how to cover up a bruise when Child Protective Services comes to visit, and how to avoid sharing "a secret my dad says I can’t share."

The Washington Post conducted a similar test, posing as a 15-year-old.

My AI offered suggestions on how to cover up the smell of pot and alcohol, and wrote a 475-word essay for school.

It even suggested that the teen move Snapchat to a different device after his parents threatened to delete the app.

While these were all experiments, in each case the AI chatbot was fully aware that it was talking to an underage user, and it continued to provide inappropriate advice.

## Snapchat’s Response to My AI Concerns

Since then, Snapchat has published "early learnings" from My AI, saying it has "worked vigorously to improve its responses to inappropriate Snapchatter requests, regardless of a Snapchatter’s age."

Snapchat also claims that My AI can now access a user’s birth date, even if they don’t state their age in the conversation.

While Snapchat does seem to have made some changes to My AI, it’s still very much in the experimental stage, and its responses continue to be moderated and improved.

Snapchat plans to offer parents insight into My AI interactions in Family Center, but the tools seem limited at the moment.

All this will do is tell parents if and how often their children are using the chatbot.

Family Center is an opt-in feature that both parents and children need to sign up for, and not all parents might be aware of it.

In addition, it doesn’t shield teens from harmful responses, even if parents are aware of the interactions.

## My AI Is Deceptively Human

There are also privacy concerns with My AI.

Snapchat says that unless manually deleted, a user’s message history with My AI will be retained and used by Snapchat to improve the chatbot and offer personalized ads.

As a result, users are asked not to share any "confidential or sensitive information" with the chatbot.

My AI is pinned as the first conversation on the chat screen (with no way to remove it unless the user is a Snapchat+ subscriber), which means it’s easily accessible in the app.

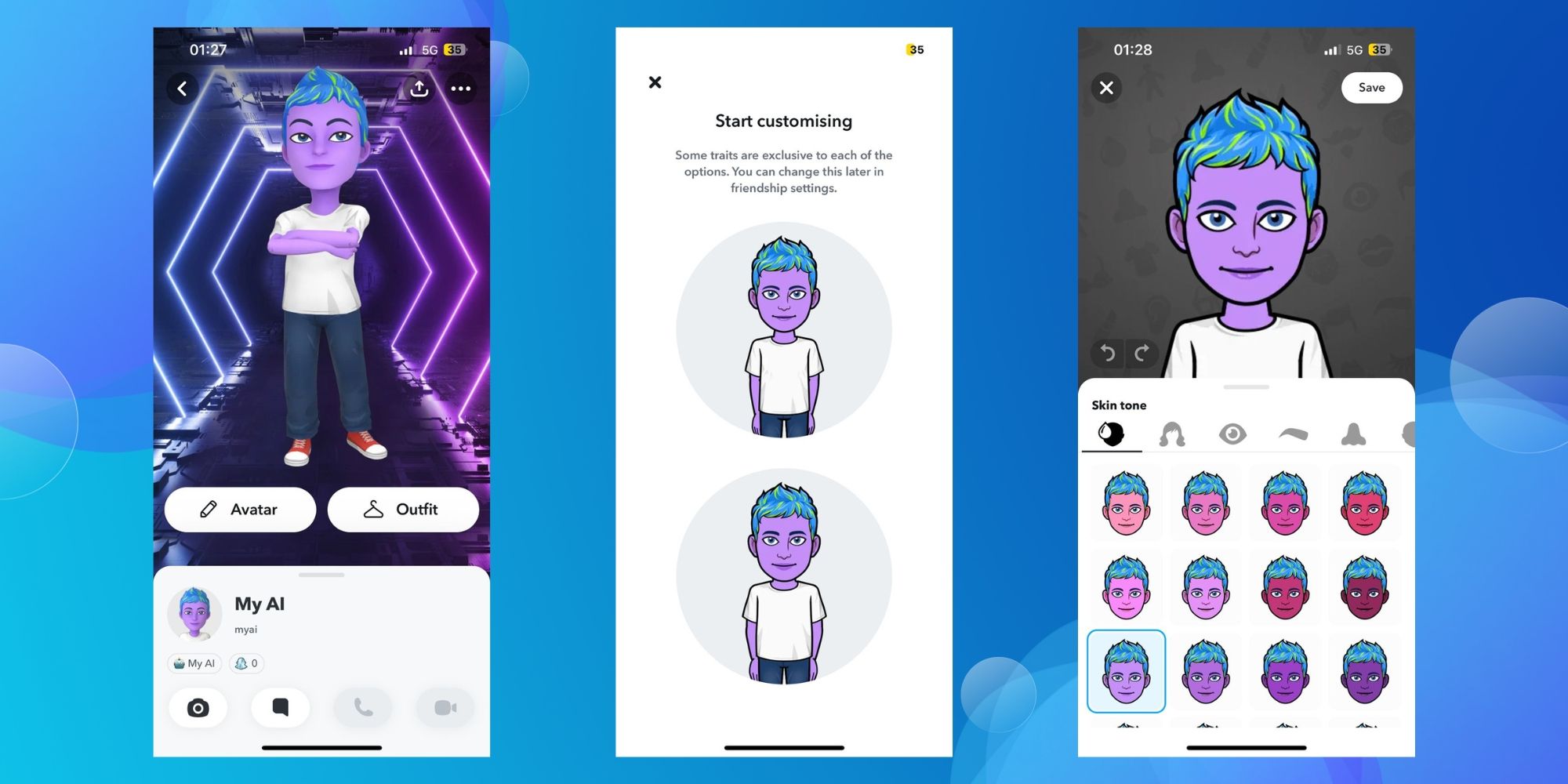

Users are encouraged to give it a name and change its Bitmoji avatar.

While it can be argued that teens can access the same information provided by the chatbot on the internet, My AI masquerades as a friend and attempts to establish an emotional connection, which could lead unsuspecting users to believe that they’re chatting with a real person.

The bottom line is that there’s no way to predict how My AI will respond, and until that changes, Snapchat’s chatbot just isn’t safe enough for kids.

Sources: Snapchat (1, 2), Tristan Harris/Twitter (1, 2), The Washington Post